Using Automation to Meet and Exceed Quality Standards in Modeling and Simulation

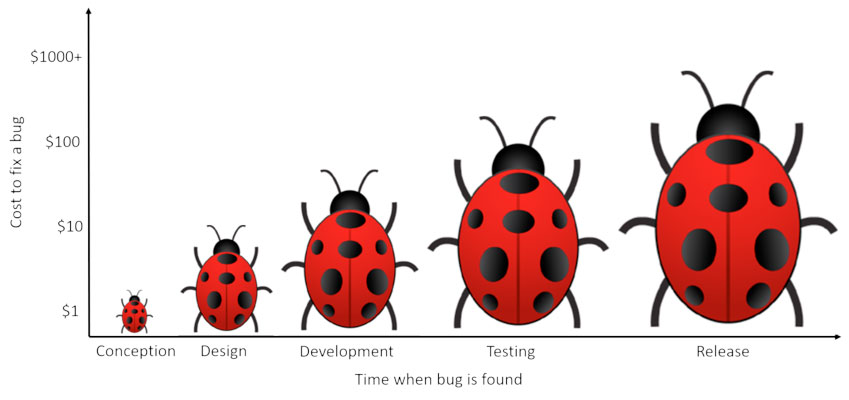

Developing models and libraries for a single tool, for multiple tools, or for cross-testing (compilation and simulation performed in different tools) is an intricate task. By some estimates, 15 to 50 errors are introduced per 1,000 lines of code. Most of these errors are ‘silent bugs’: they cause no crashes or compilation failures, but they produce unexpected behavior in the models. If hardware design decisions are based on simulation, faulty simulation results can cause long delays and excessive costs.

To catch these bugs early in development, regression tests must be set up and run after every change to the model code base. A standard regression test for simulation models compares a set of result trajectories against a known ‘golden’ result.
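As a rough illustration of such a regression check, the Python sketch below interpolates a new result onto the time points of a stored golden result and fails if any variable deviates by more than an absolute tolerance. It is not MTT's implementation; the trajectory format (variable name mapped to time and value lists), the tolerance values, and the variable name `room.T` are assumptions made for illustration.

```python
"""Minimal golden-result regression check (illustrative sketch, not MTT code)."""
from bisect import bisect_left


def interpolate(times, values, t):
    """Linearly interpolate a trajectory with ascending times at time t, clamping at both ends."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)  # now times[i - 1] < t <= times[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def regression_passes(golden, result, tol):
    """golden/result map variable name -> (times, values); fail on a missing variable or any deviation > tol."""
    for name, (ref_times, ref_values) in golden.items():
        if name not in result:
            return False
        new_times, new_values = result[name]
        worst = max(abs(interpolate(new_times, new_values, t) - v)
                    for t, v in zip(ref_times, ref_values))
        if worst > tol:
            return False
    return True


# Hypothetical data: the new run is sampled on a different time grid than the golden run.
golden = {"room.T": ([0.0, 1.0, 2.0], [293.15, 294.0, 295.0])}
result = {"room.T": ([0.0, 0.5, 2.0], [293.15, 293.6, 295.0])}
print(regression_passes(golden, result, tol=0.1))
print(regression_passes(golden, result, tol=0.01))
```

Interpolating onto the golden time grid keeps the check meaningful when a solver change alters the output sampling, which is common between model revisions.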

Modelon’s Model Testing Toolkit (MTT) is a test automation tool designed to expose model performance irregularities early in the development and testing cycle. With an intuitive graphical user interface and conversion scripting capability, Model Testing Toolkit ensures models of any size are tested thoroughly and accurately, giving modeling engineers the confidence to move their product from development to production.

To illustrate the benefits of the Model Testing Toolkit as a quality assurance test automation tool, this blog post uses the Buildings Library from Lawrence Berkeley National Laboratory as test data.

Simple test case setup

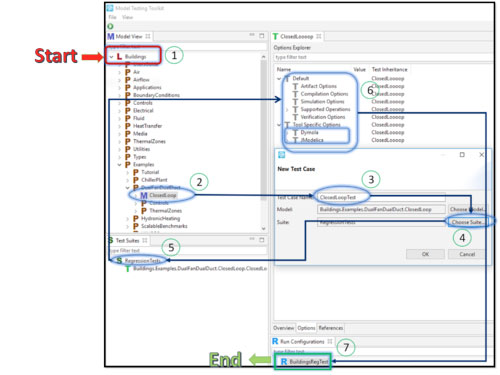

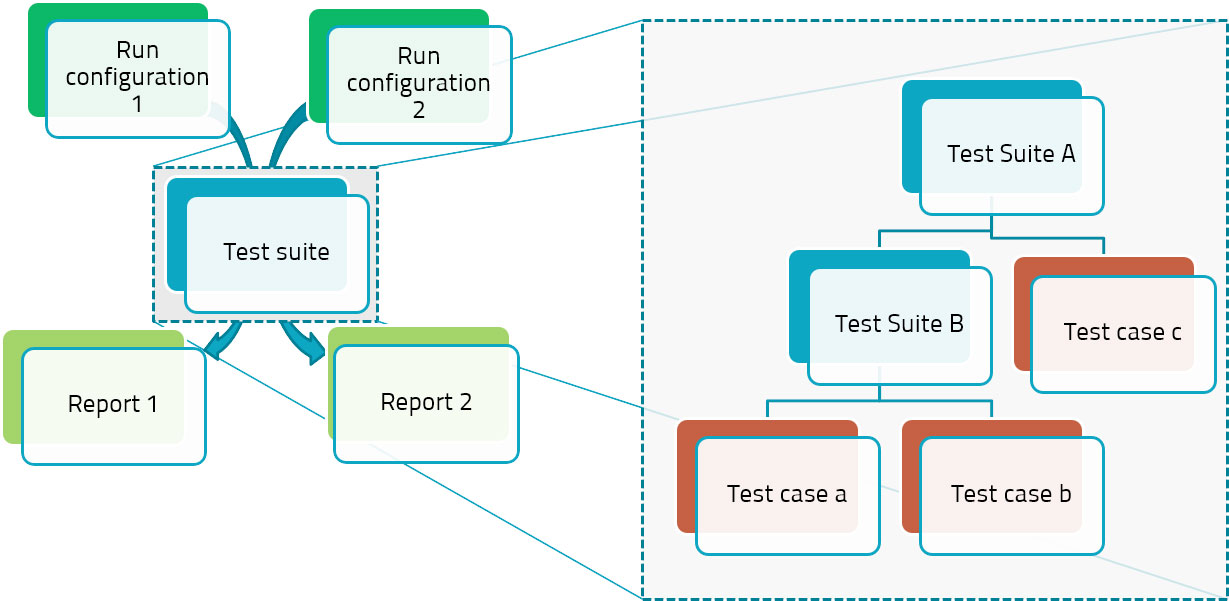

Any library under development needs tight coupling between development and testing to ensure quality. Quality assurance testing begins by loading the Buildings Library into MTT and choosing an example model to test. We then create a test case “ClosedLoopTest” for this model and add it to a test suite “RegressionTests”. A test suite is a collection of test cases or lower-level test suites, which lets the user build hierarchical tests (Figure 3). A test suite can also store common default options for all test suites and test cases it contains.
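The suite hierarchy can be pictured as a tree whose leaves are test cases. The Python sketch below is a hypothetical data model, not MTT's internal representation: the `Suite` and `Case` classes, the `Nightly` sub-suite, the `OpenLoopTest` case, and the model names are invented for illustration; only `RegressionTests` and `ClosedLoopTest` come from the text.

```python
"""Hypothetical tree of test suites and test cases (illustration only, not MTT's data model)."""
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple, Union


@dataclass
class Case:
    name: str
    model: str  # fully qualified Modelica model name (placeholder values below)


@dataclass
class Suite:
    name: str
    children: List[Union["Suite", Case]] = field(default_factory=list)

    def add(self, child):
        """Attach a test case or a lower-level suite and return it for chaining."""
        self.children.append(child)
        return child

    def iter_cases(self, prefix: str = "") -> Iterator[Tuple[str, Case]]:
        """Depth-first walk yielding (path, case) for every case in this suite and its sub-suites."""
        path = f"{prefix}{self.name}/"
        for child in self.children:
            if isinstance(child, Suite):
                yield from child.iter_cases(path)
            else:
                yield path + child.name, child


root = Suite("RegressionTests")
root.add(Case("ClosedLoopTest", "ExampleLibrary.ClosedLoopModel"))  # placeholder model name
nightly = root.add(Suite("Nightly"))                                # hypothetical sub-suite
nightly.add(Case("OpenLoopTest", "ExampleLibrary.OpenLoopModel"))   # hypothetical case
for path, case in root.iter_cases():
    print(path, "->", case.model)
```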

Next, we provide MTT with the compilation and simulation options for the model. Compilation options are passed to the compiler in the ‘compile’ call; simulation options are passed to the simulator when it is created, i.e. as arguments to the simulator ‘constructor’. Both can be set at different levels in MTT: on a test suite, where they serve as common defaults for all test suites and test cases it contains, or individually on each test case.
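One way to picture this layering is a lookup that walks from the test case outward until it finds a setting. The sketch below uses Python's `collections.ChainMap` for that; the level layout (`compile_options` and `simulation_options` keys) and every option name and value are hypothetical and do not reflect MTT's actual option names.

```python
"""Layered option lookup: test-case settings override suite defaults (illustrative sketch)."""
from collections import ChainMap


def effective_options(levels, kind):
    """Resolve one option kind ('compile_options' or 'simulation_options').

    `levels` is ordered from the outermost test suite down to the test case;
    ChainMap searches its maps left to right, so the innermost level is put first.
    """
    maps = [level.get(kind, {}) for level in reversed(levels)]
    return dict(ChainMap(*maps))


# Hypothetical option names and values, chosen only to show the override order.
suite_defaults = {"simulation_options": {"tolerance": 1e-6, "solver": "CVode"}}
nested_suite = {"compile_options": {"generate_debug_info": False}}
test_case = {"simulation_options": {"tolerance": 1e-8}}

levels = [suite_defaults, nested_suite, test_case]
print(effective_options(levels, "simulation_options"))  # tolerance from the case, solver from the suite
print(effective_options(levels, "compile_options"))
```

Keeping compilation and simulation options in separate maps mirrors the distinction in the text: one set goes to the compile call, the other to the simulator constructor.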

Figure 2: Modelica test packages can be loaded directly into the MTT GUI, together with the libraries they depend on, to create test suites. It is also possible to load the library itself and create test cases from it.

Once the compilation and simulation options are defined, we execute the test suite through a run configuration, here named “BuildingRegTest”. A run configuration contains all information on which test suites should be run and how to run them. The same test suite can be run by multiple run configurations, for example to test different tools or options, as illustrated on the left of Figure 3. Here we set Modelon’s OPTIMICA Compiler Toolkit as both the compilation and the simulation tool.
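To make the relationship between suites and run configurations concrete, the Python sketch below expands each configuration into one job per suite it references. The `RunConfiguration` fields and the second configuration `BuildingRegTestDymola` are hypothetical; MTT stores more information (dependencies, directories) than shown here.

```python
"""Hypothetical run-configuration model: which suites to run and with which tools."""
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RunConfiguration:
    name: str
    suites: Tuple[str, ...]   # names of the test suites this configuration runs
    compile_tool: str         # tool used for compilation
    simulate_tool: str        # tool used for simulation


def plan(configs: List[RunConfiguration]) -> List[Tuple[str, str, str, str]]:
    """Expand configurations into (configuration, suite, compile tool, simulate tool) jobs."""
    return [(c.name, suite, c.compile_tool, c.simulate_tool)
            for c in configs for suite in c.suites]


configs = [
    RunConfiguration("BuildingRegTest", ("RegressionTests",), "OCT", "OCT"),
    # Hypothetical second configuration reusing the same suite with another tool.
    RunConfiguration("BuildingRegTestDymola", ("RegressionTests",), "Dymola", "Dymola"),
]
for job in plan(configs):
    print(job)
```

Because the suite is referenced by name rather than copied, both configurations run exactly the same test cases, which is what makes the results comparable across tools.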

Figure 3: Hierarchy of test creation

Auto-creation of test suites

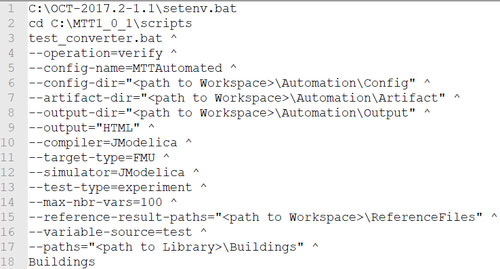

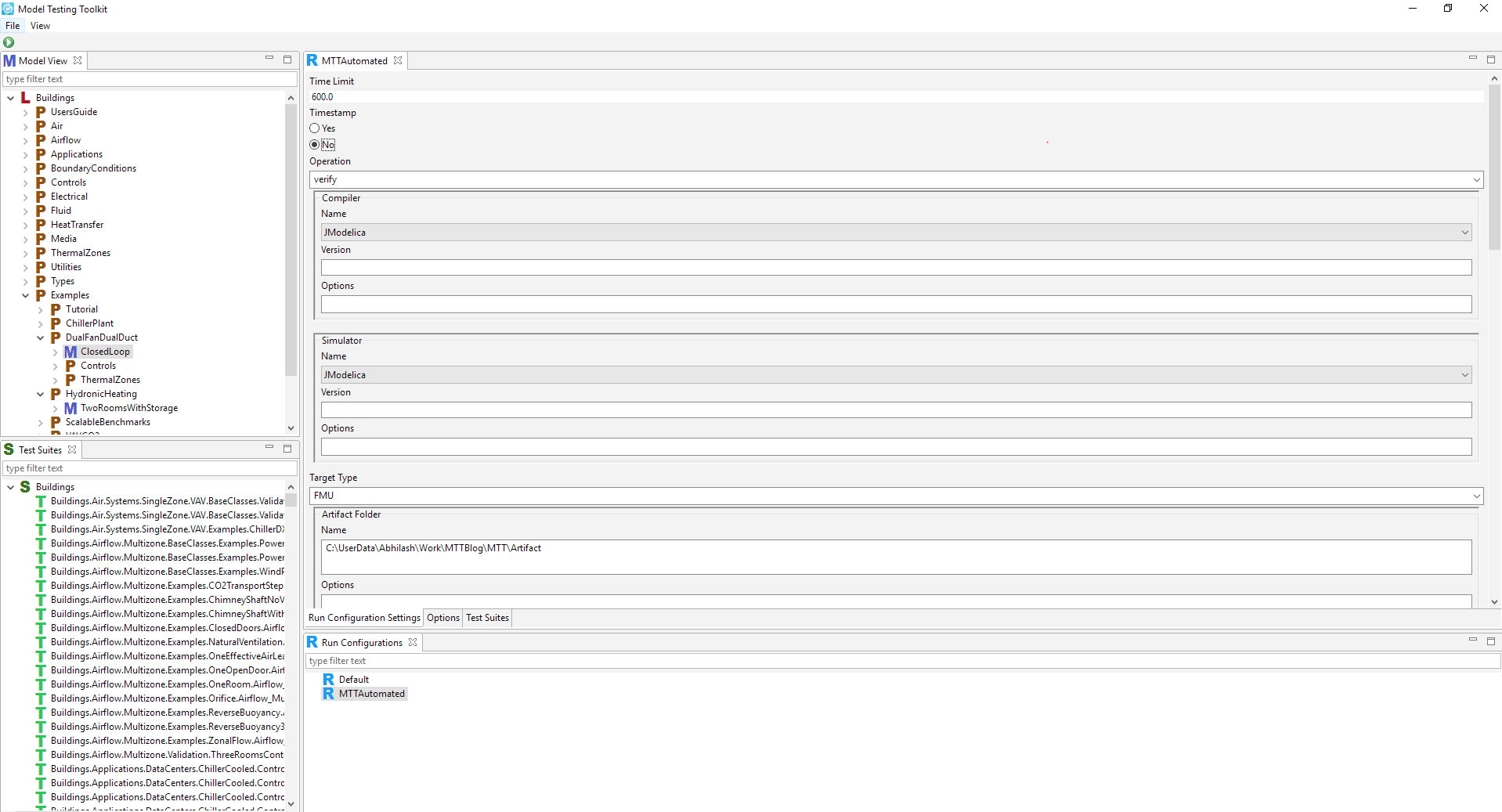

As a library grows during development, so does the number of test cases needed to capture the dynamics of its models. To lighten the burden on the user, MTT offers conversion scripts that generate test suites directly from Modelica code. The script reads the simulation settings stored in each model’s experiment annotation and stores them in the corresponding test case. A script that automates test suite creation is shown in Figure 4. Running it creates a test suite named ‘Buildings’: the script browses the Buildings Library for models that have an experiment annotation and automatically creates a test case for each of them. It also creates a run configuration “MTTAutomated”, which stores the tool, the model dependencies, the artifact, and the result and output directories. If reference .mat files are given, they are converted to CSV files and stored in the test case data files. The generated test suites and run configuration can be loaded back into MTT, as shown in Figure 5.
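The core idea of reading simulation settings from an experiment annotation can be sketched with regular expressions. This is not MTT's conversion script and is far less robust than a real Modelica parser: it assumes plain numeric literals, takes only the first experiment annotation per file, and ignores comments. The model `ClosedLoop` and the function names are hypothetical.

```python
"""Read experiment-annotation settings from Modelica source (simplified sketch, not MTT's conversion script)."""
import re
from pathlib import Path

# Match the standard experiment(...) annotation; the word boundary excludes
# identifiers that merely end in "experiment".
_EXPERIMENT = re.compile(r"\bexperiment\s*\(([^)]*)\)")
# Only plain numeric literals are handled; expressions such as 2*3600 are not.
_SETTING = re.compile(r"(\w+)\s*=\s*([-+]?(?:\d+\.?\d*|\.\d+)(?:[eE][-+]?\d+)?)")


def parse_experiment(source: str):
    """Return e.g. {'StartTime': 0.0, 'StopTime': 86400.0} for the first experiment annotation, or None."""
    match = _EXPERIMENT.search(source)
    if match is None:
        return None
    return {key: float(value) for key, value in _SETTING.findall(match.group(1))}


def collect_experiments(library_root: str):
    """Map each .mo file under library_root that has an experiment annotation to its settings."""
    root = Path(library_root)
    found = {}
    for path in sorted(root.rglob("*.mo")):
        settings = parse_experiment(path.read_text(encoding="utf-8", errors="replace"))
        if settings is not None:
            found[str(path.relative_to(root))] = settings
    return found


example = """
model ClosedLoop
  annotation(experiment(StartTime=0, StopTime=86400, Tolerance=1e-06),
             __Dymola_experimentSetupOutput(events=false));
end ClosedLoop;
"""
print(parse_experiment(example))
```

Each dictionary returned by `collect_experiments` corresponds to one candidate test case, with the annotation values supplying its default start time, stop time, and tolerance.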

Figure 4: Script for automated testing

Figure 5: Auto-created test suites loaded in MTT

Continuous simulation

Once the tests are set up in MTT, we can use them for cross-testing in FMI-compliant tools. MTT currently works with MATLAB, Dymola, OpenModelica, and JModelica (OCT). Separate tools can also be set for compiling and simulating a model, provided the compilation tool generates an FMU. These settings can be stored in a run configuration and reused to run different test suites with the same dependencies.
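The rule that mixed tool pairs require an FMU can be expressed as a small filter over all compile/simulate combinations. In this sketch, which tools export FMUs is supplied by the caller rather than looked up, every listed tool is assumed to be able to simulate an imported FMU, and the tool list is hypothetical; it does not describe MTT's actual validation logic.

```python
"""Enumerate compile/simulate tool pairs for cross-testing (illustrative sketch)."""
from itertools import product


def cross_test_pairs(tools, fmu_exporters):
    """Return (compile_tool, simulate_tool) pairs.

    Using the same tool for both steps is always allowed; mixing tools is only
    allowed when the compiling tool exports an FMU. Every listed tool is assumed
    to be able to simulate an imported FMU.
    """
    return [(c, s) for c, s in product(tools, repeat=2)
            if c == s or c in fmu_exporters]


# Hypothetical tool list and FMU-export capabilities, for illustration only.
tools = ["Dymola", "OCT"]
for compile_tool, simulate_tool in cross_test_pairs(tools, fmu_exporters={"OCT"}):
    print(f"compile with {compile_tool}, simulate with {simulate_tool}")
```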

Verification

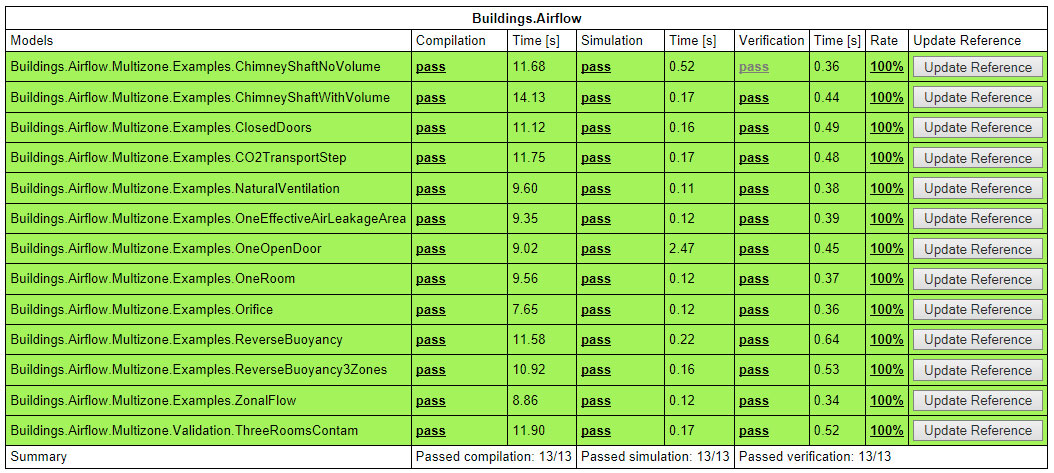

MTT supports verifying results against a known reference. The variables to compare are set in the test cases. MTT uses the result comparison tool from the Modelica Association for trajectory verification; the tool generates an HTML report comparing the result trajectories with the reference, as shown in Figure 6. The error tolerance setting of the tube determines the pass percentage of the results. When the start time equals the stop time, a single-point verifier is used automatically; currently the only option is ssv, a simple verifier for steady-state results.
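The effect of a tolerance setting on the pass percentage can be illustrated with a deliberately simplified check. The Modelica Association tool builds its tube differently, and that construction is not reproduced here; this sketch uses a plain band of `abs_tol + rel_tol * |reference|` around each reference sample, assumes result and reference are sampled on the same time grid, and falls back to comparing only the final value when the start time equals the stop time. The data layout and default tolerances are assumptions.

```python
"""Simplified tolerance-band verification with a single-point fallback (not the Modelica Association tool's algorithm)."""


def within_band(reference, result, abs_tol, rel_tol):
    """True if each result value is within abs_tol + rel_tol*|ref| of the reference value at the same index."""
    if len(reference) != len(result):
        return False
    return all(abs(y - r) <= abs_tol + rel_tol * abs(r) for r, y in zip(reference, result))


def pass_percentage(case, abs_tol=1e-6, rel_tol=1e-3):
    """Percentage of reference variables whose result passes.

    `case` holds 'start', 'stop', 'reference' and 'result'; the last two map a variable
    name to values sampled on the same time grid. If start == stop, only the final
    (steady-state) value is compared, mimicking a single-point check.
    """
    reference = case["reference"]
    if not reference:
        raise ValueError("no variables selected for comparison")
    single_point = case["start"] == case["stop"]
    passed = 0
    for name, ref in reference.items():
        res = case["result"].get(name)
        if res is None:
            continue  # a missing variable counts as a failure
        if single_point:
            ref, res = ref[-1:], res[-1:]
        passed += within_band(ref, res, abs_tol, rel_tol)
    return 100.0 * passed / len(reference)


transient = {"start": 0.0, "stop": 10.0,
             "reference": {"a": [1.0, 2.0, 3.0], "b": [0.0, 0.0, 0.0]},
             "result": {"a": [1.0, 2.001, 3.0], "b": [0.0, 0.5, 0.0]}}
steady = {"start": 5.0, "stop": 5.0, "reference": {"x": [4.0]}, "result": {"x": [4.0001]}}
print(pass_percentage(transient))
print(pass_percentage(steady))
```

Loosening `abs_tol` to 1.0 lets variable `b` pass as well, which shows directly how the tolerance setting drives the pass percentage.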

Figure 6: Test report

In this blog post, we looked at how the Model Testing Toolkit enables continuous testing for higher model quality. In a subsequent post, we’ll examine how the Model Testing Toolkit integrates with continuous integration platforms such as Jenkins.

To learn more about the additional capabilities and integrations of MTT, visit our Model Testing Toolkit product page.

To talk to an expert on how Model Testing Toolkit can help you with model quality assurance testing, contact us here.